JAKARTA, cssmayo.com – Gesture recognition, which bridges human-computer interaction through intuitive movement, has honestly changed the way I see tech. When I first messed around with gesture-based apps, I wasn’t sure waving my hand to control a device would ever feel natural. Spoiler: it totally does, once you nail the basics.

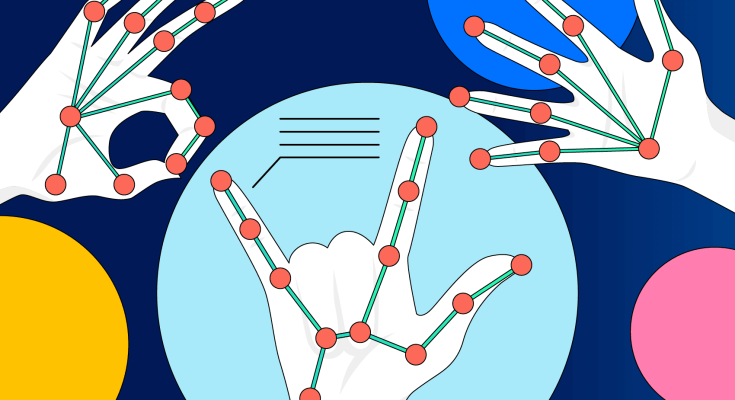

Imagine controlling your smart TV with a wave of your hand, navigating virtual environments by simply pointing, or assisting surgeons in the operating room through sterile hand gestures. Gesture Recognition is the technology that turns these scenarios into reality. By interpreting human body movements as input, Gesture Recognition redefines how we interact with machines—making interfaces more natural, accessible, and immersive.

What Is Gesture Recognition?

Gesture Recognition is a subfield of Human-Computer Interaction (HCI) and computer vision that detects and interprets human motions—such as hand waves, finger pinches, or full-body postures—and translates them into commands for digital systems. Unlike traditional input devices (keyboard, mouse, touchscreens), gesture-based interfaces rely on:

- Cameras and Depth Sensors (e.g., RGB-D cameras)

- Inertial Measurement Units (IMUs) in wearables

- Machine Learning Models to classify movement patterns

By combining sensor data with advanced algorithms, Gesture Recognition systems can map intuitive movements to application-specific actions.

How Gesture Recognition Works

- Data Acquisition
  - RGB or infrared cameras capture images at high frame rates.
  - Depth sensors (e.g., Microsoft Kinect, Intel RealSense) record per-pixel distance information.
  - Wearable IMUs measure the acceleration and orientation of limbs.

- Preprocessing & Feature Extraction
  - Background subtraction and noise filtering isolate the user from the scene.
  - Skeleton tracking algorithms locate key joints (e.g., hands, elbows, shoulders).
  - Temporal features (velocity, acceleration, and joint trajectories) are computed to capture dynamic gestures.

- Gesture Classification
  - Rule-based Methods: Define explicit thresholds on joint angles or motion speed.
  - Machine Learning: Train classifiers (e.g., SVM, Random Forest) on labeled gesture datasets.
  - Deep Learning: Use convolutional or recurrent neural networks (CNNs, LSTMs) to learn spatiotemporal patterns directly from sensor inputs.

- Action Mapping
  - Recognized gestures are mapped to system commands (e.g., “swipe left” → “next slide”).
  - Feedback (visual, haptic, or audio) confirms successful recognition.
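To make the four stages concrete, here is a minimal sketch of a rule-based swipe detector. It assumes an upstream tracker (not shown) already delivers one (x, y) hand position per frame, normalized to [0, 1] with x increasing toward the user's right. The function names, the speed threshold, and the `ACTIONS` table are hypothetical, chosen only for illustration.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical input: one (x, y) hand position per frame, normalized to [0, 1],
# with x increasing toward the user's right (assumes a mirrored camera image).
Point = Tuple[float, float]


def velocities(track: List[Point], dt: float) -> List[Point]:
    """Feature extraction: finite-difference velocity between consecutive frames."""
    return [((x1 - x0) / dt, (y1 - y0) / dt)
            for (x0, y0), (x1, y1) in zip(track, track[1:])]


def classify_swipe(track: List[Point], dt: float,
                   min_speed: float = 1.0) -> Optional[str]:
    """Rule-based classification: thresholds on mean velocity (units per second)."""
    v = velocities(track, dt)
    if not v:
        return None
    mean_vx = sum(vx for vx, _ in v) / len(v)
    mean_vy = sum(vy for _, vy in v) / len(v)
    # Reject slow motion and motion that is not predominantly horizontal.
    if abs(mean_vx) < min_speed or abs(mean_vx) < 2 * abs(mean_vy):
        return None
    return "swipe_right" if mean_vx > 0 else "swipe_left"


# Action mapping: recognized gesture -> system command (hypothetical names).
ACTIONS: Dict[str, str] = {
    "swipe_left": "next_slide",
    "swipe_right": "previous_slide",
}


def handle(track: List[Point], dt: float) -> Optional[str]:
    """Classify the track and return the mapped command, or None if no gesture."""
    gesture = classify_swipe(track, dt)
    return ACTIONS.get(gesture) if gesture else None


if __name__ == "__main__":
    # Hand moving right-to-left across the frame at 30 fps.
    track = [(0.8, 0.5), (0.6, 0.5), (0.4, 0.51), (0.2, 0.5)]
    command = handle(track, dt=1 / 30)
    # Feedback step: here just a console message.
    print(f"recognized -> {command}" if command else "no gesture")
```

A machine-learning or deep-learning classifier would replace `classify_swipe`, while the feature extraction before it and the action mapping after it keep the same shape.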

Key Applications of Gesture Recognition

- Virtual and Augmented Reality: Immersive experiences rely on natural hand and body interactions for object manipulation, menu navigation, and environmental exploration.

- Smart Home & Entertainment: Control lighting, temperature, and media playback through simple gestures, reducing reliance on remote controls and touchscreens.

- Automotive: In-car gesture controls let drivers adjust volume, answer calls, or change navigation views without searching for physical buttons or touchscreen menus.

- Healthcare & Rehabilitation: Surgeons can interact with medical images in sterile environments, and physical therapists can track patient progress through motion-based exercises.

- Sign Language Interpretation: Translating sign language into text or speech helps bridge communication barriers for deaf and hard-of-hearing communities.

Challenges in Gesture Recognition

- Variability Among Users: Differences in hand size, body proportions, and movement styles require robust models that generalize across populations.

- Environmental Factors: Lighting changes, background clutter, and occlusions (e.g., one hand blocking another) can degrade tracking and recognition accuracy.

- Real-Time Performance: Low-latency processing is critical for responsive interfaces, which demands optimized algorithms and hardware acceleration.

- Ambiguity & Context: Similar gestures may have different meanings in different applications, so context-aware systems are needed to disambiguate user intent.
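A common mitigation for the hand-size and position variability listed above is to normalize landmarks before classification. The sketch below assumes a 21-point hand skeleton in which index 0 is the wrist and index 9 is the base of the middle finger (the layout MediaPipe Hands uses); the function name and the choice of reference distance are illustrative assumptions, not a prescribed method.

```python
import math
from typing import List, Sequence, Tuple

Landmark = Tuple[float, float]  # (x, y) in image coordinates

# Assumed landmark indices (21-point layout, as in MediaPipe Hands).
WRIST = 0
MIDDLE_MCP = 9  # knuckle at the base of the middle finger


def normalize_hand(landmarks: Sequence[Landmark]) -> List[Landmark]:
    """Make landmarks invariant to where the hand is and how big it appears.

    1. Translate so the wrist sits at the origin (position invariance).
    2. Divide by the wrist-to-middle-knuckle distance (scale invariance).
    """
    wx, wy = landmarks[WRIST]
    mx, my = landmarks[MIDDLE_MCP]
    scale = math.hypot(mx - wx, my - wy)
    if scale == 0.0:
        raise ValueError("degenerate hand: wrist and middle knuckle coincide")
    return [((x - wx) / scale, (y - wy) / scale) for x, y in landmarks]


if __name__ == "__main__":
    small = [(float(i % 5), float(i // 5)) for i in range(21)]
    # Same pose, twice as large and shifted elsewhere in the frame.
    large = [(2 * x + 10, 2 * y + 3) for x, y in small]
    a, b = normalize_hand(small), normalize_hand(large)
    print(all(math.isclose(p, q, abs_tol=1e-9)
              for pa, pb in zip(a, b) for p, q in zip(pa, pb)))
```

This handles size and position only; differences in hand rotation and movement style still have to be learned by the classifier or addressed with further normalization.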

Future Trends and Innovations

- Multimodal Interaction: Combining gesture recognition with voice commands, facial expressions, and eye tracking for richer, context-aware interfaces.

- Edge-AI Deployment: Running gesture models directly on cameras or embedded devices to reduce latency and keep raw video on the device, which helps protect user privacy.

- Personalized Gesture Models: Adaptive systems that learn each user's style over time, improving accuracy and user satisfaction.

- Advanced Wearables: Smart gloves and AR glasses equipped with miniaturized sensors are expected to enable more precise and unobtrusive gesture input.

- Deep Learning Advancements: Transformer-based architectures and self-supervised learning promise more robust understanding of complex, continuous movements.
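Personalized gesture models can start out as simple as templates that drift toward one user's own style. The following is a hypothetical nearest-centroid classifier over fixed-length feature vectors (for example, normalized landmarks flattened into a list), where each user-confirmed sample nudges that gesture's centroid through an incremental mean. The class name, `prior_weight`, and the feature format are assumptions for illustration, not a description of any particular product.

```python
from typing import Dict, List, Sequence


class PersonalizedCentroids:
    """Hypothetical nearest-centroid gesture classifier that adapts to one user."""

    def __init__(self, base: Dict[str, Sequence[float]], prior_weight: int = 5):
        # Start from population-level templates. The prior counts as
        # `prior_weight` samples, so a few user samples cannot erase it at once.
        if prior_weight < 1:
            raise ValueError("prior_weight must be at least 1")
        self.centroids: Dict[str, List[float]] = {g: list(v) for g, v in base.items()}
        self.counts: Dict[str, int] = {g: prior_weight for g in base}

    def predict(self, x: Sequence[float]) -> str:
        """Return the gesture whose centroid is closest in squared Euclidean distance."""
        def sq_dist(c: Sequence[float]) -> float:
            return sum((a - b) ** 2 for a, b in zip(x, c))
        return min(self.centroids, key=lambda g: sq_dist(self.centroids[g]))

    def update(self, x: Sequence[float], label: str) -> None:
        """Fold a user-confirmed sample into its centroid: c <- c + (x - c) / n."""
        self.counts[label] += 1
        n = self.counts[label]
        self.centroids[label] = [c + (xi - c) / n
                                 for c, xi in zip(self.centroids[label], x)]


if __name__ == "__main__":
    model = PersonalizedCentroids({"wave": [0.0, 0.0], "pinch": [1.0, 1.0]},
                                  prior_weight=1)
    sample = [0.7, 0.7]  # this user's "wave" sits closer to the stock "pinch"
    print(model.predict(sample))  # pinch
    model.update(sample, "wave")
    model.update(sample, "wave")
    print(model.predict(sample))  # wave
```

A larger `prior_weight` makes adaptation slower but more resistant to a handful of mislabeled samples.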

Conclusion

Gesture Recognition is transforming HCI by letting us interact with technology as naturally as we move our own bodies. From gaming and VR to healthcare and automotive controls, intuitive movement-based interfaces enhance accessibility, immersion, and efficiency. As sensors become more affordable and AI algorithms more powerful, Gesture Recognition will continue to bridge the gap between humans and machines—making digital interactions feel as seamless as real-world gestures.
